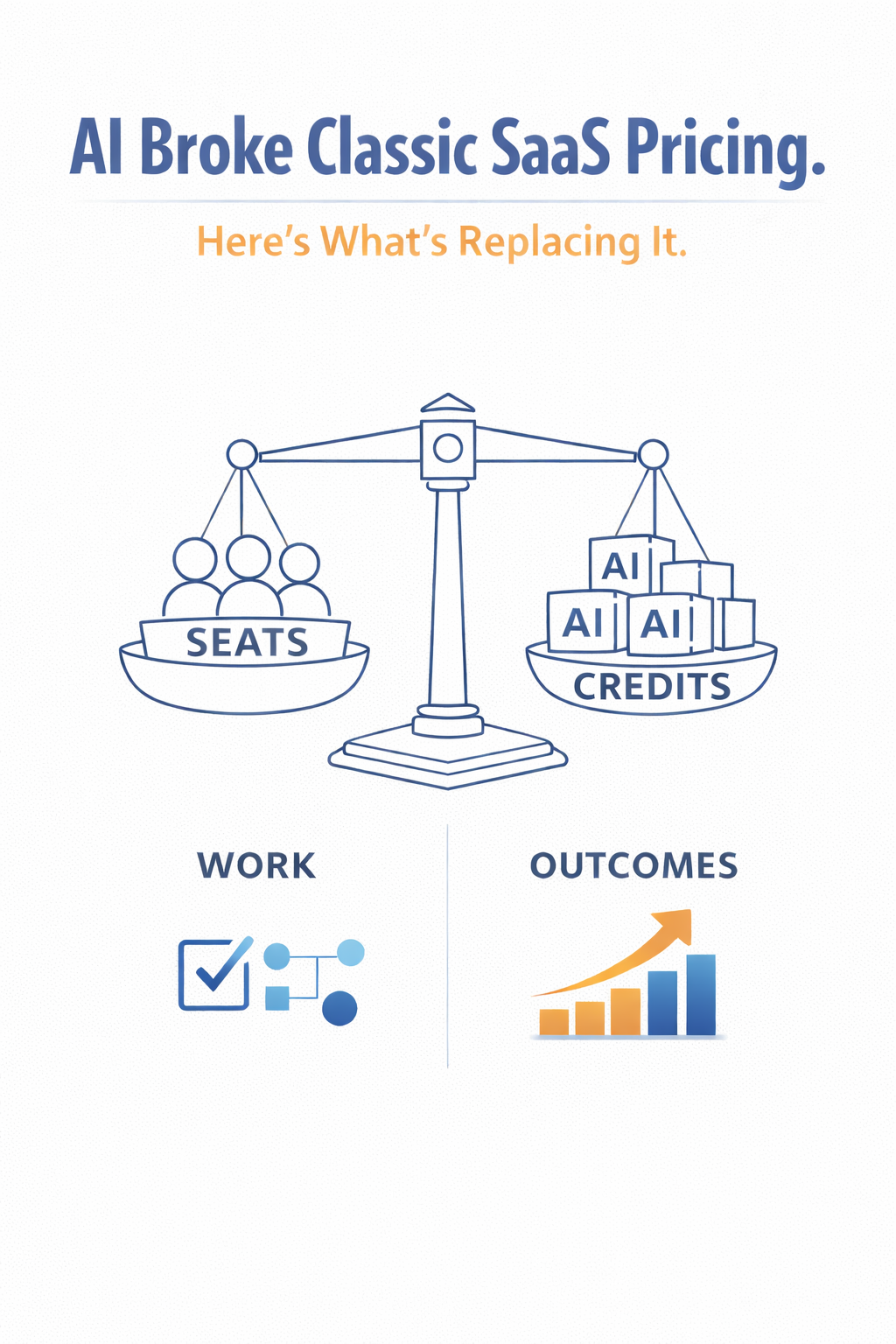

AI Broke Classic SaaS Pricing. Here’s What’s Replacing It.

SaaS is evolving fast. From the investor seat, one pattern keeps showing up: classic SaaS pricing breaks down for AI applications. Founders are adapting with new business models, and we’re learning from those experiments in real time.

Quick note: I’m talking about AI applications, the workflow products sitting on top of models (not the model labs themselves).

The simple reason: AI brought marginal cost back (even for apps)

Traditional SaaS leaned on two assumptions:

Seats ≈ value

Marginal cost ≈ ~0

AI apps break both.

A single power user can trigger huge variable costs. Usage is spiky. And while customers still expect SaaS-like predictability, you’re paying for “intelligence” as a variable input (inference, tool calls, orchestration, sometimes humans-in-the-loop).

Rule of thumb: price in the customer’s unit of value, manage cost in the model’s unit of compute.
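To make the margin problem concrete, here is a minimal Python sketch with made-up numbers: a flat $50 seat and an assumed blended inference cost of $0.01 per 1,000 tokens. Both figures and the gross_margin helper are hypothetical. They only illustrate how one seat price can hide very different margins.

```python
# Hypothetical figures for illustration only, not real vendor prices.
SEAT_PRICE = 50.0           # assumed monthly price per seat, in dollars
COST_PER_1K_TOKENS = 0.01   # assumed blended inference cost, in dollars

def gross_margin(seats: int, tokens_used: int) -> float:
    """Gross margin as a fraction of revenue for one customer-month."""
    revenue = seats * SEAT_PRICE
    cost = tokens_used / 1000 * COST_PER_1K_TOKENS
    return (revenue - cost) / revenue

# Same seat price, very different usage.
typical_user = gross_margin(seats=1, tokens_used=500_000)
power_user = gross_margin(seats=1, tokens_used=10_000_000)
print(f"typical user: {typical_user:.0%}, power user: {power_user:.0%}")
```

Under these assumptions the typical user leaves roughly a 90% margin. The power user costs $100 to serve on a $50 seat, which works out to a margin of about -100%. Seat pricing alone cannot distinguish the two.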

The bigger shift: software is becoming a work engine

Customers don’t want “AI features.” They want work done:

tickets handled

documents processed

calls summarized

leads qualified

policies checked

reports generated

workflows completed

So the monetization question becomes: what’s your unit of work/value?

That’s what’s replacing “per seat, forever.”

The new monetization menu for AI applications

1. Work-based consumption (credits, actions, runs)

Unit: credits, actions, workflow runs, documents, minutes analyzed, conversations handled.

Why it works: matches value and scales with demand.

Where it breaks: “bill shock” + budgeting friction.

Investor lens: great if cohorts expand and margins don’t implode.

Internally, you can translate this into tokens/compute. Externally, sell “work units.”
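One way to keep the two views separate is a small catalog that pairs each customer-facing work unit with an internal cost estimate. This Python sketch is hypothetical. The unit names, prices, and average token counts are invented, and a real system would meter cost per run rather than rely on averages.

```python
from dataclasses import dataclass

COST_PER_1K_TOKENS = 0.01  # assumed blended model cost, in dollars

@dataclass(frozen=True)
class WorkUnit:
    price: float     # external: what the customer pays per unit
    avg_tokens: int  # internal: assumed average tokens consumed per unit

    @property
    def unit_cost(self) -> float:
        return self.avg_tokens / 1000 * COST_PER_1K_TOKENS

# Hypothetical catalog: customers see documents and calls, never tokens.
CATALOG = {
    "document_processed": WorkUnit(price=0.50, avg_tokens=10_000),
    "call_summarized": WorkUnit(price=1.00, avg_tokens=30_000),
}

def revenue_and_cost(usage: dict[str, int]) -> tuple[float, float]:
    """Return (invoice total, estimated compute cost) for a month of usage."""
    revenue = sum(CATALOG[unit].price * count for unit, count in usage.items())
    cost = sum(CATALOG[unit].unit_cost * count for unit, count in usage.items())
    return revenue, cost

revenue, cost = revenue_and_cost({"document_processed": 100, "call_summarized": 20})
print(f"invoice ${revenue:.2f}, compute ${cost:.2f}, margin {(revenue - cost) / revenue:.0%}")
```

Price and cost sit on separate axes here. If model costs fall, only avg_tokens or COST_PER_1K_TOKENS changes, and the customer’s price list stays the same.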

2. Seat + allowance (the hybrid default)

Unit: per-seat baseline + included monthly allowance (credits/runs) + overages.

Why it’s winning: predictability for buyers, upside for you.

Where it breaks: confusing packaging, wrong allowance levels, surprise overages.

Investor lens: expansion is good; surprise billing is fatal.
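The hybrid’s bill arithmetic fits in a few lines. In this hypothetical Python sketch, all prices and allowances are assumptions. Each seat pays a base fee and adds an included credit allowance to an account-wide pool. Credits beyond the pool are billed as overage, and an optional cap bounds that overage so expansion does not turn into surprise billing.

```python
from typing import Optional

def monthly_bill(
    seats: int,
    credits_used: int,
    seat_price: float = 40.0,             # assumed per-seat baseline, dollars
    credits_per_seat: int = 500,          # assumed included allowance per seat
    overage_per_credit: float = 0.02,     # assumed overage rate, dollars
    overage_cap: Optional[float] = None,  # optional ceiling on overage charges
) -> float:
    base = seats * seat_price
    allowance = seats * credits_per_seat  # pooled across the account
    overage_credits = max(0, credits_used - allowance)
    overage = overage_credits * overage_per_credit
    if overage_cap is not None:
        overage = min(overage, overage_cap)
    return base + overage

print(monthly_bill(seats=10, credits_used=4_000))   # within the allowance
print(monthly_bill(seats=10, credits_used=8_000))   # 3,000 credits over
print(monthly_bill(seats=10, credits_used=8_000, overage_cap=25.0))
```

With ten seats, 4,000 credits stays inside the 5,000-credit pool, so the bill is just the $400 baseline. At 8,000 credits the overage adds $60, or $25 with the cap in place.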

3. Outcome-based pricing (pay for results)

Unit: ticket resolved, appointment booked, revenue recovered, SLA met, churn reduced.

Why it’s powerful: tight ROI story, supports premium pricing.

Where it breaks: attribution fights, measurement disputes, longer sales cycles.

Investor lens: love the ambition, as long as outcomes are defined, measurable, and bounded by clear guardrails.

4. Agent pricing (pay per task / workflow completion)

Unit: completed task, autonomous workflow, “job” successfully executed.

Why it’s emerging: buyers want “done,” not more tools.

Where it breaks: retries, partial success, edge cases, and the question of what counts as “complete.”

Investor lens: reliability becomes a moat: orchestration, evals, QA, exception handling.
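Simple arithmetic shows why reliability matters so much here. Suppose you charge per completed task, absorb retries yourself, and each attempt succeeds independently with probability p. The expected number of attempts per completed task is then 1/p, so the expected cost per billed task is the cost per attempt divided by p. The Python sketch below assumes exactly that retry-until-success model, and the prices are hypothetical. Partial success is not modeled.

```python
def margin_per_task(price: float, cost_per_attempt: float, success_rate: float) -> float:
    """Expected gross margin per completed task, assuming failed attempts are
    retried at the vendor's expense and attempts succeed independently
    (a geometric model: expected attempts = 1 / success_rate)."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    expected_cost = cost_per_attempt / success_rate
    return (price - expected_cost) / price

for p in (0.5, 0.8, 0.95):
    print(f"success rate {p:.0%}: margin {margin_per_task(1.00, 0.20, p):.0%}")
```

Under these assumptions, raising first-pass success from 50% to 95% lifts margin from 60% to about 79% at the same $1.00 price. The customer sees no difference, but the business does.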

5. Subscription tiers with limits (simple plans, controlled usage)

Unit: monthly fee with caps (runs, speed, context size, features).

Why it works: simple GTM, procurement-friendly.

Where it breaks: margin volatility if limits and routing aren’t engineered.

Investor lens: watch gross margin discipline and cohort retention by tier.

6. Platform / marketplace take-rate (ecosystem monetization)

Unit: rev share, usage share, distribution fees, templates/extensions marketplace.

Why it matters: once others build on you, you gain leverage.

Where it breaks: cold start + governance + quality control.

Investor lens: platform pull must be real, not “we launched an app store.”

The part founders underestimate: gross margin is now a product decision

In AI apps, you don’t “end up” with good margins; you design them.

Your knobs:

model routing (cheap vs premium)

caching / batching

context discipline

retrieval quality (less waste, better answers)

guardrails (prevent runaway usage)

“when not to use AI”

This is product strategy, not a spreadsheet problem.
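Several of these knobs can live in one small component. The Python sketch below is hypothetical. The model names, per-token prices, and the needs_reasoning flag are assumptions, and a production router would decide based on evals rather than a caller-supplied flag. It shows routing (cheap by default, premium only when needed), caching (repeat requests add no spend), and a guardrail (a hard per-account budget).

```python
from dataclasses import dataclass, field

# Hypothetical model tiers and prices (dollars per 1,000 tokens).
PRICE_PER_1K_TOKENS = {"cheap-model": 0.0005, "premium-model": 0.01}

@dataclass
class Router:
    budget: float  # guardrail: max spend for this account
    spent: float = 0.0
    cache: dict = field(default_factory=dict)

    def run(self, prompt: str, tokens: int, needs_reasoning: bool = False) -> str:
        key = (prompt, needs_reasoning)
        if key in self.cache:  # caching: a repeat request adds no spend
            return self.cache[key]
        # Routing: only send requests flagged as hard to the premium tier.
        model = "premium-model" if needs_reasoning else "cheap-model"
        cost = tokens / 1000 * PRICE_PER_1K_TOKENS[model]
        if self.spent + cost > self.budget:  # guardrail against runaway usage
            raise RuntimeError(f"budget of ${self.budget:.2f} would be exceeded")
        self.spent += cost
        result = f"[{model}] {prompt}"  # stand-in for a real model call
        self.cache[key] = result
        return result

router = Router(budget=1.00)
router.run("summarize ticket 123", tokens=2_000)
router.run("summarize ticket 123", tokens=2_000)  # served from cache
router.run("audit contract", tokens=50_000, needs_reasoning=True)
print(f"spent ${router.spent:.3f}")
```

The point of the sketch is that each margin knob is a line of product logic, not a spreadsheet cell. Changing the routing rule or the budget changes gross margin directly.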

Pricing is now a trust contract, not just packaging

In classic SaaS, pricing was mostly a sales decision.

In AI apps, pricing is a product experience. You’re implicitly promising:

predictability (budget control)

accountability (what gets delivered)

governance (what the system will and won’t do)

If customers can’t understand their bill, they won’t trust your product, no matter how good the demo is.

How we evaluate AI application business models

When we underwrite an AI app, we usually ask:

What’s your atomic unit of value?
Access, consumption, work, or outcomes?

Does pricing align with your cost curve?
Are your best customers also your worst margins?

Do cohorts expand and stick?
Not just revenue expansion, but workflow embedding.

Does your model match how your buyer budgets?
Enterprise needs predictability; some segments tolerate variability.

Where’s the moat if models commoditize?
Workflow embedding, distribution, reliability, trust, domain depth, and integrations, rather than “we use the best model.”

A simple framework: choose your unit, then stabilize it

If value is access → seats/subscription

If value is consumption → credits/actions/runs

If value is work delivered → tasks/agents/workflows

If value is business impact → outcomes

Most strong companies make two moves:

pick one primary unit

add one stabilizer (allowance, caps, tiers, or a clear overage policy), backed by great transparency

I’m genuinely curious

If you’re building an AI application:

What do you believe your true unit of value is: access, consumption, work, or outcomes?

How are you avoiding “bill shock” while still capturing expansion?

Are you at risk of building a business where your best customers generate your worst margins?

And if model costs drop 10x in 24 months… does your pricing still make sense?

I’m collecting patterns, and I’d love to learn how you’re thinking about it.